Exploring Digital Information Technologies: Lecture 1- Part 2

The Landscape—Information and Computation

Encoding Digital Information

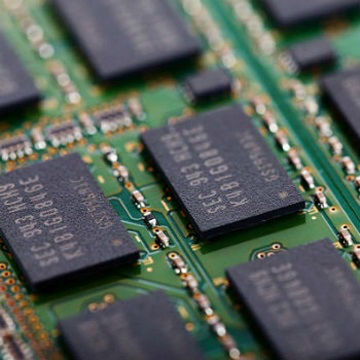

Picture courtesy: https://pxhere.com/en/photo/1458883

Digital information is data encoded into symbols.

The most common form of digital data is the binary digit, or bit (it stores a teeny bit of information).

A bit can either be 0 or 1.

How is information coded?

Question: What is your favorite color?

Answers: Purple, Red, Orange, Blue, Navy Blue, Brown

0,1,2,3,4,5
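The numbering of the survey answers can be sketched in a few lines of Python. This is only an illustration: the variable names are made up for this example, and any other assignment of numbers to colors would work just as well.

```python
# Survey answers from the slide, in the order they were listed.
answers = ["Purple", "Red", "Orange", "Blue", "Navy Blue", "Brown"]

# Encoding: give each color the number of its position in the list.
color_to_number = {color: number for number, color in enumerate(answers)}

# Decoding: reverse the mapping to get the color back from the number.
number_to_color = {number: color for color, number in color_to_number.items()}

print(color_to_number["Orange"])  # 2
print(number_to_color[5])         # Brown
```

The key point is that both sides must agree on the same table; the number 2 means "Orange" only because we said so.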

Encoding color information using numbers

The answers are information about us.

Here are a few colors:

We can use a number to represent each color.

There are 9 colors, so the numbers 0-8 can be used:

0,1,2,3,4,5,6,7,8

Encoding with bits

However, with bits you have only 0 and 1 to encode all the information. Why just 0 and 1?

Information is Stored Physically

How do we store information?

In a digital system, information is stored using physical quantities such as:

◼ voltage,

◼ crystal structure, or

◼ magnetic field.

Storing Information Physically

Physical storage systems typically have two states, off and on, which we can call 0 and 1.

That gives us a binary digit, or bit.

How do we measure information?

Information is measured by how many bits are needed to store it.

How is information coded into bits?

0,1,2,3,4,5,6,7,8

How many bits would we need to store 9 different numbers? Let’s start small.

1 Bit

With 1 bit (0 or 1) we can store 2 numbers (2 pieces of information):

0 -> 0

1 -> 1

2 Bits

With 2 bits we can store 4 numbers (or colors or names or 4 of anything):

Numbers

00 -> 0

01 -> 1

10 -> 2

11 -> 3

Colors

00, 01, 10, 11 (each pattern labels one of four color swatches; swatches not shown)

Mickey and Friends

00 -> Mickey

01 -> Minnie

10 -> Donald

11 -> Daisy

3 Bits and 4 Bits

With 3 bits we can store 8 numbers:

000 -> 0

001 -> 1

010 -> 2

011 -> 3

100 -> 4

101 -> 5

110 -> 6

111 -> 7

We need 1 more bit to get our 9th number in:

0000 -> 0

0001 -> 1

0010 -> 2

0011 -> 3

0100 -> 4

0101 -> 5

0110 -> 6

0111 -> 7

1000 -> 8
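Tables like the ones above can be generated rather than typed out. Here is a minimal Python sketch; the function name `bit_patterns` is an assumption made for this example, not something from the lecture.

```python
def bit_patterns(n_bits, how_many):
    """Return the first `how_many` numbers written as n_bits-wide bit strings."""
    if how_many > 2 ** n_bits:
        raise ValueError("not enough bits for that many numbers")
    # format(5, "04b") gives "0101": binary, padded with zeros to 4 digits.
    return [format(number, f"0{n_bits}b") for number in range(how_many)]

# Reproduce the 4-bit table for our 9 numbers (0 through 8).
for pattern in bit_patterns(4, 9):
    print(pattern, "->", int(pattern, 2))
```

Asking for 9 numbers with only 3 bits raises an error, which is exactly why the 9th number forced us to add a bit.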

From Bits to Decimal Numbers and Back

You don't have to do the math but you must understand how it works.
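For those who want to see the math anyway, here is one way to sketch it in Python. Each bit position has a place value (1, 2, 4, 8, ...), just as decimal digits have place values 1, 10, 100, and so on. The function names are illustrative assumptions.

```python
def bits_to_decimal(bits):
    """Add up the place values (1, 2, 4, 8, ...) of every 1 in the bit string."""
    total = 0
    # The rightmost bit has position 0, so walk the string from right to left.
    for position, bit in enumerate(reversed(bits)):
        if bit == "1":
            total += 2 ** position
    return total


def decimal_to_bits(number):
    """Repeatedly divide by 2; the remainders, read backwards, are the bits."""
    if number == 0:
        return "0"
    bits = ""
    while number > 0:
        bits = str(number % 2) + bits  # remainder becomes the next bit on the left
        number //= 2
    return bits


print(bits_to_decimal("1000"))  # 8
print(decimal_to_bits(7))       # 111
```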

How many different things can we represent with “n” bits?

How many different things can 1 bit represent?

1 bit can be either 0 or 1, so it can represent 2 things.

How many different things can 2 bits represent?

With 2 bits we have 4 patterns: 00, 01, 10, 11

How many different things can 3 bits represent?

How many different things can 4 bits represent?

How many different things can 31 bits represent?

How many different things can n bits represent?
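The pattern behind these questions is that every extra bit doubles the number of patterns, so n bits give 2 to the power n patterns. A short Python sketch (the function name is an assumption for this example):

```python
def count_patterns(n_bits):
    """Each added bit doubles the count, so n bits give 2**n patterns."""
    return 2 ** n_bits

for n in (1, 2, 3, 4, 31):
    print(f"{n} bits -> {count_patterns(n):,} different things")
```

For 31 bits this comes to 2,147,483,648, a little over two billion.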

How many bits do we need to represent “n” different things?

The number of bits needed to represent n different things is log2(n), rounded up to the next whole number:

To represent 4 different things:

To represent 9 different things:

To represent the 60 students in this class:

To represent the 8 billion people on the planet:
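The four cases above can be checked with a short Python sketch. It finds the smallest number of bits whose pattern count is at least n, which is the same as rounding log2(n) up. The function name `bits_needed` is an assumption for this example; it uses Python's built-in `int.bit_length` to avoid floating-point rounding.

```python
def bits_needed(n_things):
    """Smallest number of bits b such that 2**b >= n_things."""
    if n_things < 1:
        raise ValueError("need at least one thing to represent")
    # The largest label is n_things - 1 (we start counting at 0),
    # and bit_length() says how many bits that label takes.
    return (n_things - 1).bit_length()

for n in (4, 9, 60, 8_000_000_000):
    print(f"{n:,} things -> {bits_needed(n)} bits")
```

This gives 2 bits for 4 things, 4 bits for 9 things, 6 bits for 60 students, and 33 bits for 8 billion people.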

Encoding Images

What do you see beyond zeros and ones?

I have a matrix of 1s and 0s:

Let me lay it out nicely:

Let’s convert the bits into an image, with 1s denoting white pixels and 0s denoting black pixels:
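The same idea can be tried in plain Python. The matrix below is a made-up 7-by-8 example, not the one from the slide, and the drawing is done with text characters: `#` stands in for a white pixel (1) and `.` for a black pixel (0).

```python
# A hypothetical bit matrix: each inner list is one row of pixels.
bits = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 1, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]


def render(matrix):
    """Turn a matrix of bits into text: 1 -> '#', 0 -> '.'."""
    return "\n".join("".join("#" if bit else "." for bit in row) for row in matrix)


print(render(bits))
print("bits used:", sum(len(row) for row in bits))
```

With 1 bit per pixel, this small picture takes 56 bits.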

Encoding colored images

What if I had a more colorful smiley?

Modern systems often use 24 bits to specify one color.

How many possible colors?
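With 24 bits there are 2 to the power 24 patterns, so the count is quick to compute. The sketch below also shows one common way the 24 bits are split up: 8 bits each for red, green, and blue. That split is an assumption of this example; the slide only says 24 bits per color.

```python
# Number of distinct patterns, and so distinct colors, in 24 bits.
print(f"{2 ** 24:,} possible colors")  # 16,777,216 possible colors


def pack_rgb(red, green, blue):
    """Pack three 0-255 values into one 24-bit number (assumed RGB layout)."""
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("each channel must fit in 8 bits (0-255)")
    return (red << 16) | (green << 8) | blue


# Pure red: the first 8 bits are all 1, the remaining 16 are all 0.
print(format(pack_rgb(255, 0, 0), "024b"))
```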

What to do with lots of bits?

8 bits = 1 byte

How many bytes in 49,766,400 bits?

There are larger units for measuring bits:

How many kilobytes (KB)?

How many megabytes (MB)?
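The conversions can be sketched as below. The sketch assumes decimal units (1 KB = 1,000 bytes, 1 MB = 1,000,000 bytes), which matches the 6.2 MB figure used later in this lecture; some systems use 1,024 instead, which gives slightly smaller numbers.

```python
BITS_PER_BYTE = 8
BYTES_PER_KB = 1_000        # assumption: decimal kilobyte
BYTES_PER_MB = 1_000_000    # assumption: decimal megabyte

total_bits = 49_766_400

total_bytes = total_bits // BITS_PER_BYTE
kilobytes = total_bytes / BYTES_PER_KB
megabytes = total_bytes / BYTES_PER_MB

print(f"{total_bytes:,} bytes")   # 6,220,800 bytes
print(f"{kilobytes:,.1f} KB")     # 6,220.8 KB
print(f"{megabytes:.1f} MB")      # 6.2 MB
```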

How much information can a couple of kilobytes store?

◼ A GIF may be around 800 KB.

◼ A JPEG may be around 100 KB.

◼ A short email may be around 5 KB.

◼ A PNG may be about 4.4 KB.

◼ A page of plain text may be about 2 KB.

Compression

Lots and lots of bits! Are all of them needed?

Suppose one image, a frame from a lecture video, requires 6,220,800 bytes (about 6.2 MB).

What about a one-hour recorded lecture with 24 images every second?

That is about 537 GB (6,220,800 bytes × 24 frames per second × 3,600 seconds).
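The arithmetic behind the one-hour estimate can be sketched as follows, assuming every frame is stored in full with no compression and using decimal gigabytes (1 GB = 1,000,000,000 bytes). With the exact frame size the total is roughly 537 GB; rounding the frame to 6.2 MB first gives a slightly smaller figure.

```python
bytes_per_frame = 6_220_800      # one uncompressed frame, from the slide
frames_per_second = 24
seconds_per_hour = 60 * 60

total_bytes = bytes_per_frame * frames_per_second * seconds_per_hour
total_gb = total_bytes / 1_000_000_000   # assumption: decimal gigabyte

print(f"{total_bytes:,} bytes")   # 537,477,120,000 bytes
print(f"about {total_gb:.0f} GB")  # about 537 GB
```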

That's why we don't usually send videos through e-mail: it's a lot of information!

What do humans do?

Human languages use a trick to reduce the effective size of ideas.

In particular, frequently used ideas use short words.

Uncommon ideas require long words.

What do modern technologies do?

Modern technologies use compression a lot. They rely on the idea that common things can be given fewer bits (like shorter words); usually a few GB is enough for a one-hour video.

Uncommon videos require more bits (like longer words).
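One classic way to give common things fewer bits is Huffman coding. The sketch below is an illustration of that one technique on a short text message; it is not how video compression works in detail, and the function name and sample message are assumptions for this example.

```python
import heapq
from collections import Counter


def huffman_code_lengths(text):
    """Return {symbol: code length in bits} for a Huffman code built from text."""
    counts = Counter(text)
    if len(counts) == 1:
        return {symbol: 1 for symbol in counts}
    # Heap entries: (total count, tie-breaker, {symbol: depth so far}).
    heap = [(count, i, {symbol: 0}) for i, (symbol, count) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two rarest groups; every symbol in them gets one bit longer.
        count_a, _, depths_a = heapq.heappop(heap)
        count_b, _, depths_b = heapq.heappop(heap)
        merged = {s: d + 1 for s, d in {**depths_a, **depths_b}.items()}
        heapq.heappush(heap, (count_a + count_b, next_id, merged))
        next_id += 1
    return heap[0][2]


message = "aaaaaaaabbbbccd"              # 'a' is common, 'd' is rare
lengths = huffman_code_lengths(message)
fixed_bits = 2 * len(message)            # 4 symbols -> 2 bits each
huffman_bits = sum(lengths[s] for s in message)

print(lengths)                           # {'d': 3, 'c': 3, 'b': 2, 'a': 1}
print(fixed_bits, "bits fixed-width vs", huffman_bits, "bits compressed")
```

The common letter `a` gets a 1-bit code and the rare letters get 3-bit codes, so the message shrinks from 30 bits to 25.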

What is Computation?

The second key concept to be discussed in this class is computation.

Given some information, answer a question, or make a decision.

Humans are good at such things. So are computers. Let's consider some examples.

Travel Directions

The shortest distance between Champaign and Atlanta:

But you cannot drive down that straight line. So how about the shortest drive from Champaign to Atlanta:

How far do you have to drive?

How long will it take?
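The straight-line part of this example is itself a small computation. The sketch below uses the haversine formula for distance along the Earth's surface. The city coordinates and the Earth radius are approximate values assumed for this example; the driving distance and time would need road data, which is not shown here.

```python
import math


def great_circle_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Haversine distance between two (latitude, longitude) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


# Approximate coordinates (assumed): north latitude, west longitude as negative.
champaign = (40.1164, -88.2434)
atlanta = (33.7490, -84.3880)

distance_km = great_circle_km(*champaign, *atlanta)
print(f"straight-line distance: about {distance_km:.0f} km")
```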

Identifying Images

Can you identify what we see in these images?

Social Network Analysis

Here is an example of a social network among a dolphin population:

We can use computation to find communities within this social network: groups of dolphins that interact more with members of the group than with members outside it.

Church-Turing Hypothesis

Alan Turing introduced the Turing machine (1936), an abstract model of a computing device.

Alonzo Church proposed the hypothesis (1936): “Every computation that can be carried out in the real world can be effectively performed by a Turing Machine.”

So, in simple words, the Church-Turing hypothesis says: “Computers and humans can compute the same things.”
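To make the idea of a Turing machine a little more concrete, here is a toy simulator in Python. It is a simplified sketch, not Turing's original formulation: the rule table, state names, and function name are all made up for this example. The machine reads one tape cell at a time and follows a fixed table of rules; this particular table adds 1 to a binary number.

```python
def run_turing_machine(tape, rules, state="start", blank="_", max_steps=1000):
    """Run a rule table on a tape and return the tape contents at the end."""
    cells = dict(enumerate(tape))   # position -> symbol; missing cells are blank
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]   # look up what to do
        cells[head] = write
        head += 1 if move == "R" else -1
    low, high = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(low, high + 1)).strip(blank)


# (state, symbol read) -> (symbol to write, move Left/Right, next state)
increment_rules = {
    ("start", "0"): ("0", "R", "start"),   # walk right to the end of the number
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),   # past the end: turn around
    ("carry", "1"): ("0", "L", "carry"),   # 1 + 1 = 0, carry the 1
    ("carry", "0"): ("1", "L", "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", "L", "halt"),    # ran off the left edge: new digit
}

print(run_turing_machine("1011", increment_rules))  # 1100
print(run_turing_machine("111", increment_rules))   # 1000
```

A person with pencil and paper could follow the same six rules by hand and get the same answers, which is the spirit of the hypothesis.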

Terminology You Should Know from this Lecture

◼ information

◼ computation

◼ technology

◼ bit (BInary digiT) and Byte (8 bits)

◼ Kilo, Mega, Giga, Tera, (Peta, Exa)

◼ compression

Concepts You Should Know from this Lecture

◼ information is stored as bits (0s and 1s)

◼ information is stored as physical quantities: voltage or magnetic state

◼ how to find the number of bits needed to represent one thing from a group of things

◼ compression is used to reduce the number of bits needed for more common things in a group

◼ humans and computers are (in theory) equally capable of computation, given sufficient memory and time (the Church-Turing hypothesis)