LLMs Intro

Basics, Prompting and Using Tools

LLMs 101

Background Article

Interesting Open Questions

How do perturbations of input affect trajectories in embedding space?

Wolfram Summer School Project: Study the effect of small changes in large language models

How to quantitatively study phase transitions in the model outputs?

Wolfram Summer School Project: Study of the possibility of phase transitions in LLMs

Predicting Next Word - Concrete Example

Sampling and Temperature - Concrete Example

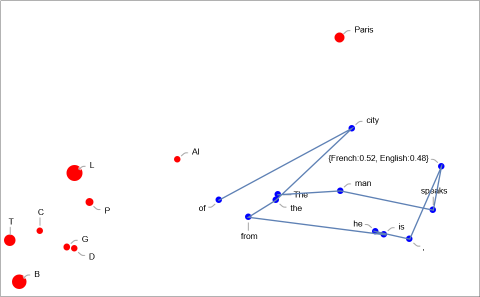

Embeddings - Concrete Example

Prompting Techniques

Other prompting techniques stand in contrast to basic “in-out” prompting, where you look to generate a response $y \sim p(y \mid x)$ given some prompt $x$.

Basic “in-out” prompting might look like this:

Consider a damped harmonic oscillator. After four cycles the amplitude of the oscillator has dropped to 1/e of its initial value. Find the ratio of the frequency of the damped oscillator to its natural frequency.

I just need to solve this problem, don't provide any explanation. Just immediately write down the correct ratio.

The ratio of the frequency of the damped oscillator to its natural frequency is approximately 0.924.

Interestingly, the last one is pretty close numerically, but incorrect. The others are completely off the mark.

In[]:=

$\dfrac{8\pi}{\sqrt{1 + 64\pi^{2}}}$

Out[]=

0.999209
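As a cross-check on the value above, here is a minimal Python sketch of the same calculation. It assumes the standard underdamped model, with amplitude proportional to $e^{-\gamma t}$ and $\omega_d^2 = \omega_0^2 - \gamma^2$; the variable names are illustrative and do not come from the notebook.

```python
import math

# Assumption: underdamped oscillator, amplitude ~ exp(-gamma * t),
# with damped frequency omega_d**2 = omega_0**2 - gamma**2.
cycles = 4  # the amplitude falls to 1/e after this many damped periods

# gamma * (cycles * 2*pi / omega_d) = 1  =>  gamma / omega_d = 1 / (2*pi*cycles)
gamma_over_omega_d = 1.0 / (2.0 * math.pi * cycles)

# omega_0**2 = omega_d**2 * (1 + (gamma / omega_d)**2)
ratio = 1.0 / math.sqrt(1.0 + gamma_over_omega_d**2)

print(f"omega_d / omega_0 = {ratio:.6f}")
```

Algebraically this is the same expression as $8\pi/\sqrt{1 + 64\pi^2}$, so a response of 0.924 is off even though it looks plausible.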

A variety of terms are used as shorthand for getting the LLM to engage in more sophisticated behaviors. I personally find it helpful to see these written in a more “formal” notation to understand the differences.

Some of the archetypal techniques out there

These first few are not an exhaustive list, but following Zhou et al (2023 preprint), they do make a nice baseline from which to understand further variations.

◼

Chain of Thought: Generate a response $y \sim p(y \mid x, z_1, \ldots, z_n)$ from $z_k$, where $z_k \sim p(z_k \mid x, z_1, \ldots, z_{k-1})$.

◼

Proposed by Wei et al (2022 preprint)

◼

Tree of Thoughts: Create a tree of partial solutions $s = [x, z_{1..k}]$ where each partial solution can be evaluated based on heuristics $V(s)$ (these evaluations typically come from the language model itself). Pick a search algorithm to explore the tree given the valuations.

◼

Proposed by Yao et al (2023 preprint)

◼

ReAct: Give the LLM some actions $a_k \in A_{\text{action}}$ it can generate as well as thoughts $\in Z_{\text{natural language}}$. Then introduce an environment so that each action $a_k$ generates an observation $o_k$. Generate the final response $y \sim p(y \mid x, o_1, \ldots, o_n, a_1, \ldots, a_n)$ from $a_k$, where $a_k \sim p(a_k \mid x, o_1, \ldots, o_{k-1}, a_1, \ldots, a_{k-1})$.

◼

Proposed by Yao et al (2022 preprint)
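To make the conditioning structure of Chain of Thought and ReAct concrete, here is a minimal Python sketch of the two sampling loops. Everything named here is hypothetical: `Sampler` stands in for the LLM, `Env` for the environment, and the toy functions only report how much context they saw, so the example shows what each draw is conditioned on and nothing about model quality.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins (not a real API): `Sampler` plays the role of the LLM,
# returning one sample given a context; `Env` turns an action into an observation.
Sampler = Callable[[str], str]
Env = Callable[[str], str]


def chain_of_thought(x: str, sample: Sampler, n: int) -> Tuple[List[str], str]:
    """Draw z_1..z_n in sequence, then y conditioned on x and all thoughts."""
    thoughts: List[str] = []
    for _ in range(n):
        # z_k ~ p(z_k | x, z_1, ..., z_{k-1})
        thoughts.append(sample("\n".join([x, *thoughts])))
    # y ~ p(y | x, z_1, ..., z_n)
    y = sample("\n".join([x, *thoughts, "Answer:"]))
    return thoughts, y


def react(x: str, sample: Sampler, env: Env, n: int) -> Tuple[List[str], List[str], str]:
    """Alternate actions and observations, then draw y from the full history."""
    actions: List[str] = []
    observations: List[str] = []

    def history() -> List[str]:
        lines = [x]
        for a, o in zip(actions, observations):
            lines += [f"Action: {a}", f"Observation: {o}"]
        return lines

    for _ in range(n):
        # a_k ~ p(a_k | x, o_1..o_{k-1}, a_1..a_{k-1})
        a_k = sample("\n".join(history()))
        actions.append(a_k)
        observations.append(env(a_k))  # the environment produces o_k
    # y ~ p(y | x, o_1..o_n, a_1..a_n)
    y = sample("\n".join([*history(), "Answer:"]))
    return actions, observations, y


def toy_sample(context: str) -> str:
    """Toy sampler: reports how many context lines it was conditioned on."""
    return f"saw {len(context.splitlines())} lines"


def toy_env(action: str) -> str:
    """Toy environment: echoes the action back as an observation."""
    return f"result of ({action})"


thoughts, y_cot = chain_of_thought("Question", toy_sample, n=3)
actions, observations, y_react = react("Question", toy_sample, toy_env, n=2)
print(thoughts, y_cot)
print(actions, observations, y_react)
```

The only structural difference between the two loops is the call to `env`: in Chain of Thought the context grows by the model's own samples, while in ReAct each sample is paired with something the model did not generate.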

Chen et al (2023 preprint) also provide a good overview of the state of prompting.
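The Tree of Thoughts item above leaves the search algorithm open. As one possible reading, here is a minimal Python sketch using beam search over partial solutions $s = [x, z_{1..k}]$. The names `propose`, `value`, `depth`, and `beam_width` are assumptions made for illustration; in practice both `propose` and `value` would typically be LLM calls, whereas here they are toy functions on a small arithmetic puzzle.

```python
from typing import Callable, List, Tuple

State = Tuple[str, ...]  # s = [x, z_1, ..., z_k]


def tree_of_thoughts(
    x: str,
    propose: Callable[[State], List[str]],  # candidate next thoughts for s
    value: Callable[[State], float],        # heuristic V(s), higher is better
    depth: int,
    beam_width: int,
) -> State:
    """Beam search: keep the `beam_width` best partial solutions per level."""
    frontier: List[State] = [(x,)]
    for _ in range(depth):
        candidates = [s + (z,) for s in frontier for z in propose(s)]
        if not candidates:
            break
        candidates.sort(key=value, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=value)


# Toy problem (illustrative only): pick numbers whose sum reaches the target x.
def toy_propose(s: State) -> List[str]:
    return ["3", "5", "8"]


def toy_value(s: State) -> float:
    target = int(s[0])
    return -abs(target - sum(int(z) for z in s[1:]))


best = tree_of_thoughts("24", toy_propose, toy_value, depth=3, beam_width=2)
print(best, toy_value(best))
```

Swapping the beam search for depth-first search with backtracking changes only the body of `tree_of_thoughts`; the state representation and the role of $V(s)$ stay the same.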

Some variations on these:

There are dozens (if not hundreds) of subtle variations on the above ideas that can be found in the literature. Below are some I’ve selected because they can be quite general and useful.

◼

Chain of Thought with Self Consistency: Generate many different reasoning traces $z_{1 \ldots n}$ (either through sampling from the LLM or some other method) and then take the most often arrived-at conclusion (through something like majority voting or weighted averaging).

◼

Proposed by Wang et al (2022 preprint)

◼

Program-aided Language Models: Similar set-up to ReAct, except $A_{\text{action}}$ are statements in some programming language and the observations are the results of running those snippets.

◼

Proposed by Gao et al (2022 preprint)

◼

Progressive-Hint Prompting: Instead of taking the reasoning traces $z_{1 \ldots n}$ as-is, you use them as “solution hints” to iteratively refine the traces until a stable conclusion is reached.

◼

Proposed by Zheng et al (2023 preprint)
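The majority-voting step in Chain of Thought with Self Consistency can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `sample_trace` is a hypothetical stand-in for one LLM call that returns a reasoning trace together with the conclusion it reaches, and the canned traces below are toy placeholders, not model outputs.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple

Trace = Tuple[str, str]  # (reasoning trace z, conclusion it arrives at)


def self_consistency(
    x: str, sample_trace: Callable[[str], Trace], n: int
) -> Tuple[str, float]:
    """Sample n traces and return the majority conclusion with its vote share."""
    traces = [sample_trace(x) for _ in range(n)]
    votes = Counter(conclusion for _, conclusion in traces)
    answer, count = votes.most_common(1)[0]
    return answer, count / n


# Toy sampler (illustrative only): cycles through canned (trace, conclusion) pairs.
_canned = cycle([
    ("trace 1", "answer A"),
    ("trace 2", "answer B"),
    ("trace 3", "answer A"),
    ("trace 4", "answer C"),
    ("trace 5", "answer A"),
])


def toy_sample_trace(x: str) -> Trace:
    return next(_canned)


answer, share = self_consistency("some prompt", toy_sample_trace, n=5)
print(answer, share)
```

Weighted averaging would replace the `Counter` with a sum of per-trace weights; the sampling loop is unchanged.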

A Picture Can Be Worth A Thousand Words

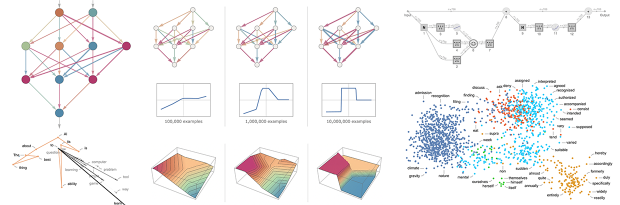

Besta et al (2024 preprint) is a recent review and classification of many techniques. They provide the following three illustrative figures.

The topologies of various techniques:

A nice visual showing the various processing steps that are typically obscured from the end user:

Highlighting the functional nature of LLM pipelines: