5.7 Convolutional Neural Network

5.7.1 Problems in Computer Vision

In[]:=

session=StartExternalSession["Python"]

Out[]=

ExternalSessionObject

Test

In[]:=

5+6

Out[]=

11

In[]:=

Map[ImageIdentify[#]&,{(* four input images, not preserved in this export *)}]

Out[]=

In[]:=

c={(* input images, not preserved in this export *)};

In[]:=

FindClusters[c,Method->{"DBSCAN","NeighborsNumber"->1.1}]//Column

Out[]=

, , , , , , , , . |
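To make the idea behind density-based clustering more concrete, here is a minimal Python sketch of DBSCAN on plain 2D points. It is an illustration under stated assumptions, not what FindClusters does internally: the names dbscan, eps and min_pts are hypothetical, min_pts counts the point itself and plays a role similar to "NeighborsNumber", and images would first have to be turned into feature vectors.

```python
import numpy as np

def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or -1 for noise."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    # Pairwise Euclidean distances between all points
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    # Indices within radius eps of each point (each point includes itself)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster = 0
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        if len(neighbors[i]) < min_pts:
            continue  # not a core point; stays noise unless reached later
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border or core point joins the cluster
            if visited[j]:
                continue
            visited[j] = True
            if len(neighbors[j]) >= min_pts:
                queue.extend(neighbors[j])  # expand only through core points
        cluster += 1
    return labels

# Two tight groups and one isolated point (made-up data)
points = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10], [50, 50]]
labels = dbscan(points, eps=1.5, min_pts=3)
```

With these made-up points, the two groups end up in separate clusters and the isolated point is labeled as noise.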

In[]:=

i=

;

In[]:=

u=ImageBoundingBoxes[i]

Out[]=

{Rectangle[{7.98709,26.7497},{124.229,196.438}]},{Rectangle[{128.687,35.4593},{294.344,222.035}]}

In[]:=

Head[u]

Out[]=

Association

In[]:=

z=Values[u]

Out[]=

{{Rectangle[{7.98709,26.7497},{124.229,196.438}]},{Rectangle[{128.687,35.4593},{294.344,222.035}]}}

In[]:=

HighlightImage[i,{Blue,z[[1]],Red,z[[2]]}]

Out[]=

5.7.2 Feature Extraction via AutoEncoder

In[]:=

persons=Map[ImageResize[#,{28,28}]&,{(* 20 person images, not preserved in this export *)}];

In[]:=

flowers=Map[ImageResize[#,{28,28}]&,{(* 21 flower images, not preserved in this export *)}];

In[]:=

mixed=Join[persons,flowers];

In[]:=

Length[mixed]

Out[]=

41

In[]:=

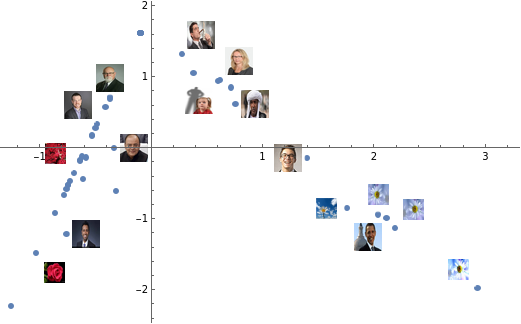

reduced=DimensionReduce[mixed,2,Method->"AutoEncoder"];

In[]:=

reduced

Out[]=

{{-5.2981,-4.56879},{-8.49511,-9.12046},{-7.12285,-6.10615},{-6.74473,-6.84916},{-3.57499,-5.54336},{3.45959,-1.67064},{16.4924,-5.98312},{24.7008,-8.44718},{-3.31051,-7.35399},{-0.819933,-0.795893},{-3.9807,-3.45886},{-5.82417,-5.01201},{4.68297,-2.46364},{7.46948,-2.74976},{-5.469,-4.71277},{7.22588,-2.78286},{9.05098,-3.72038},{-3.94466,-3.52982},{-0.819933,-0.795893},{-8.29983,-7.09777},{-8.4925,-7.26009},{-4.44396,-3.84916},{-8.78373,-7.50545},{-11.6509,-9.92104},{-14.2561,-12.116},{-7.68745,-6.58183},{-6.8925,-5.91208},{-8.11164,-6.93921},{-8.29384,-7.09272},{8.58507,-3.04892},{34.1464,-11.3563},{-0.819933,-0.795893},{20.5725,-8.05383},{23.8254,-8.30778},{-0.819933,-0.795893},{25.5779,-8.87099},{-9.64672,-8.23254},{-6.49218,-5.94676},{-7.05887,-6.05225},{-5.88234,-5.06101},{-0.819933,-0.795893}}

In[]:=

r=Standardize[reduced]

Out[]=

{{-0.483559,0.331254},{-0.760158,-1.2166},{-0.641433,-0.191548},{-0.608719,-0.444218},{-0.334479,-0.000161473},{0.274137,1.31681},{1.40171,-0.14971},{2.11188,-0.987644},{-0.311598,-0.615893},{-0.0961182,1.61428},{-0.36958,0.708699},{-0.529074,0.180531},{0.379981,1.04714},{0.621064,0.949837},{-0.498346,0.28229},{0.599988,0.93858},{0.757892,0.619766},{-0.366463,0.684569},{-0.0961182,1.61428},{-0.743263,-0.528761},{-0.759932,-0.583959},{-0.409661,0.575971},{-0.785128,-0.667399},{-1.03319,-1.48885},{-1.25859,-2.23527},{-0.690281,-0.353308},{-0.621503,-0.12555},{-0.72698,-0.474841},{-0.742745,-0.527044},{0.717582,0.848105},{2.92909,-1.97693},{-0.0961182,1.61428},{1.75471,-0.853881},{2.03615,-0.94024},{-0.0961182,1.61428},{2.18776,-1.13177},{-0.859793,-0.914653},{-0.586868,-0.137344},{-0.635898,-0.173218},{-0.534106,0.163867},{-0.0961182,1.61428}}
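As a cross-check of what the standardization step does, the following Python sketch shifts each column to zero mean and scales it to unit sample standard deviation. The function name standardize and the small sample array are illustrative assumptions, not part of the notebook.

```python
import numpy as np

def standardize(data):
    """Column-wise standardization: zero mean, unit sample standard deviation."""
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=0)
    std = data.std(axis=0, ddof=1)  # ddof=1 gives the sample standard deviation
    return (data - mean) / std

# Made-up 2D data standing in for the reduced features
sample = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
standardized = standardize(sample)
```

After this step both coordinates live on the same scale, which keeps one axis from dominating the plot or any distance-based method applied afterwards.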

In[]:=

Length[r]

Out[]=

41

In[]:=

ListPlot[MapThread[Labeled[#1,#2]&,{r,mixed}],PlotStyle->PointSize[0.01],PlotRange->All]

Out[]=

5.7.4 Receptive Fields

5.7.5 Spatial Pooling

5.7.7 Image Classification

5.7.8 Image Clustering

5.7.9 Object Localization and Identification

https://resources.wolframcloud.com/NeuralNetRepository/resources/YOLO-V3-Trained-on-Open-Images-Data

5.7.10 Cat or Dog?

In[]:=

# Importing the Keras libraries and packages

from keras.models import Sequential

from keras.layers import Conv2D

from keras.layers import MaxPooling2D

from keras.layers import Flatten

from keras.layers import Dense

In[]:=

# Initialising the CNN

classifier = Sequential()

# Step 1 - Convolution

classifier.add(Conv2D(32, (3, 3), input_shape = (64, 64, 3), activation = 'relu'))

# Step 2 - Pooling

classifier.add(MaxPooling2D(pool_size = (2, 2)))

# Adding a second convolutional layer

classifier.add(Conv2D(32, (3, 3), activation = 'relu'))

classifier.add(MaxPooling2D(pool_size = (2, 2)))

# Step 3 - Flattening

classifier.add(Flatten())

# Step 4 - Full connection

classifier.add(Dense(units = 128, activation = 'relu'))

classifier.add(Dense(units = 1, activation = 'sigmoid'))
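It can help to trace how the tensor shape and the number of trainable parameters evolve through the layers defined above. The following sketch does this arithmetic in plain Python, assuming the Keras defaults for these layers (unpadded "valid" convolutions with stride 1, and pooling with stride equal to the pool size). The helper names are illustrative.

```python
def conv_output(size, kernel, stride=1):
    """Spatial size after an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1

def pool_output(size, pool):
    """Spatial size after non-overlapping pooling (stride equals pool size)."""
    return size // pool

size, channels = 64, 3          # input_shape = (64, 64, 3)
shapes, params = [], []

for filters in (32, 32):        # the two Conv2D + MaxPooling2D stages
    # 3x3 kernel weights for every input channel, plus one bias per filter
    params.append(3 * 3 * channels * filters + filters)
    size, channels = conv_output(size, 3), filters
    shapes.append((size, size, channels))
    size = pool_output(size, 2)
    shapes.append((size, size, channels))

flat = size * size * channels   # length of the vector produced by Flatten
params.append(flat * 128 + 128) # Dense(units = 128)
params.append(128 * 1 + 1)      # Dense(units = 1)
total_params = sum(params)
```

Under these assumptions the feature maps shrink from 62x62 to 31x31, 29x29 and 14x14, Flatten yields 6272 values, and almost all of the 813,217 parameters sit in the first dense layer.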

In[]:=

# Compiling the CNN

classifier.compile(optimizer = 'adam', loss = 'binary_crossentropy', metrics = ['accuracy'])
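The loss named in the compile step can be written out in a few lines. The sketch below computes the sigmoid and the mean binary cross-entropy with NumPy. It is meant only to show the formula; the clipping constant eps is an assumption for numerical safety and need not match what Keras uses internally.

```python
import numpy as np

def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0 - eps)
    losses = -(y_true * np.log(y_prob) + (1.0 - y_true) * np.log(1.0 - y_prob))
    return float(np.mean(losses))

# An undecided prediction (p = 0.5) costs log(2) per sample
undecided = binary_crossentropy([1, 0], [0.5, 0.5])
# Confident and correct predictions cost much less
confident = binary_crossentropy([1, 0], [0.95, 0.05])
```

The loss rewards probabilities close to the true label and penalizes confident mistakes heavily, which is why it pairs naturally with a single sigmoid output unit.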

In[]:=

# Part 2 - Fitting the CNN to the images

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(rescale = 1./255,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True)

test_datagen = ImageDataGenerator(rescale = 1./255)

training_set = train_datagen.flow_from_directory('M:/training_setA/training_set',

target_size = (64, 64),

batch_size = 32,

class_mode = 'binary')

test_set = test_datagen.flow_from_directory('M:/test_setA/test_set',

target_size = (64, 64),

batch_size = 32,

class_mode = 'binary')
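Two of the preprocessing options used above are easy to reproduce by hand. The following NumPy sketch shows rescaling by 1/255 and a horizontal flip on a tiny made-up image. It is an illustration only: Keras applies the flip randomly per image, and the shear and zoom transformations are not covered here.

```python
import numpy as np

def rescale(image, factor=1.0 / 255):
    """Scale 8-bit pixel values into the range [0, 1]."""
    return image.astype(np.float32) * factor

def horizontal_flip(image):
    """Mirror an image of shape (height, width, channels) left to right."""
    return image[:, ::-1, :]

# Made-up 2x3 RGB image: the left column is black, the right column is white
image = np.zeros((2, 3, 3), dtype=np.uint8)
image[:, 2, :] = 255

scaled = rescale(image)
flipped = horizontal_flip(scaled)
```

Only the training generator augments the data. The test generator rescales and nothing else, so validation scores are measured on unmodified images.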

Next, let us train the network. The test set will be used as the validation set:

classifier.fit_generator(training_set,

steps_per_epoch = 94,

epochs = 5,

verbose=0,

validation_data = test_set,

validation_steps = 30)
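The step counts in the call above determine how many images the network sees. The short sketch below spells out this arithmetic for a batch size of 32. The dataset size of 3000 images is a hypothetical figure used only to show how a step count can be derived.

```python
import math

def steps_for(num_images, batch_size):
    """Number of batches needed to pass over num_images once."""
    return math.ceil(num_images / batch_size)

batch_size = 32
images_per_epoch = 94 * batch_size    # training images drawn per epoch
validation_images = 30 * batch_size   # validation images drawn per epoch

# Hypothetical dataset size, for illustration only
example_steps = steps_for(3000, batch_size)
```

If the step count is smaller than the number of batches in the directory, each epoch covers only part of the data.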

score = classifier.predict_generator(training_set)

score

The result shows that classification on the training set is perfect (100%).

Now let us check the test (validation) set.

score = classifier.predict_generator(test_set)

score

That is, the accuracy on the test (validation) set is 80%.
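A sigmoid output is a probability, so an accuracy figure comes from thresholding the predictions and comparing them with the true labels. The sketch below shows that computation with NumPy. The probabilities and labels are made-up values, and the function name binary_accuracy is illustrative.

```python
import numpy as np

def binary_accuracy(probabilities, labels, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    predicted = (np.asarray(probabilities).ravel() > threshold).astype(int)
    return float(np.mean(predicted == np.asarray(labels).ravel()))

# Made-up predictions shaped like the output of a one-unit sigmoid layer
probs = np.array([[0.9], [0.2], [0.6], [0.4], [0.1]])
labels = np.array([1, 0, 0, 0, 0])
acc = binary_accuracy(probs, labels)
```

With a shuffling generator the order of the predictions need not match the order of the stored labels, so a comparison of this kind assumes that both are aligned.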

Since the test set was used as the validation set, let us test our CNN on further samples:

# Part 3 - Making new predictions

import numpy as np

from keras.preprocessing import image

test_image = image.load_img('M:/cat_3.jpg', target_size = (64, 64))

test_image = image.img_to_array(test_image)

test_image = np.expand_dims(test_image, axis = 0)

result = classifier.predict(test_image)

result

# Part 3 - Making new predictions

import numpy as np

from keras.preprocessing import image

test_image = image.load_img('M:/dog_1.jpg', target_size = (64, 64))

test_image = image.img_to_array(test_image)

test_image = np.expand_dims(test_image, axis = 0)

result = classifier.predict(test_image)

result
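The value in result is a single sigmoid output, so it still has to be mapped to a class name. The sketch below shows one way to do that. The dictionary CLASS_INDICES and its folder names are hypothetical; for a directory generator the actual mapping can be read from training_set.class_indices, where classes are indexed in alphabetical order of the folder names.

```python
import numpy as np

# Hypothetical mapping, standing in for training_set.class_indices
CLASS_INDICES = {"cats": 0, "dogs": 1}

def label_from_sigmoid(result, class_indices, threshold=0.5):
    """Turn a (1, 1) sigmoid prediction into a class name."""
    index = int(np.asarray(result).ravel()[0] > threshold)
    names = {value: key for key, value in class_indices.items()}
    return names[index]

# Made-up outputs shaped like the result of classifier.predict
low = label_from_sigmoid(np.array([[0.03]]), CLASS_INDICES)
high = label_from_sigmoid(np.array([[0.97]]), CLASS_INDICES)
```

Because the training generator rescales pixels by 1/255, it is usually advisable to apply the same scaling to test_image before calling predict, so that the network sees inputs in the range it was trained on.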
