2.2 Logistic Regression

2.2.1 Iris Data Set

In[]:=

session=StartExternalSession["Python"]

Out[]=

ExternalSessionObject

Test the session:

In[]:=

5+6

Out[]=

11

In[]:=

from sklearn import datasets

iris=datasets.load_iris()

In[]:=

X=iris.data[:,[2,3]]

y=iris.target

In[]:=

import numpy as np

In[]:=

np.savetxt('D:\\dataX.txt',X,fmt='%.2e')
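As a rough illustration of what the `'%.2e'` format does to each value, the sketch below uses plain string formatting instead of `np.savetxt` itself. The sample rows are illustrative petal measurements, not values read from the data set. The format keeps three significant digits, so measurements recorded to one decimal place should survive the write and read-back unchanged.

```python
# Illustrative petal measurements (length, width) in cm; not read from the data set.
rows = [(1.4, 0.2), (4.7, 1.4), (6.0, 2.5)]

# Format each value the way fmt='%.2e' does: one leading digit, two decimals, exponent.
lines = [" ".join("%.2e" % v for v in row) for row in rows]
print(lines[0])  # 1.40e+00 2.00e-01

# Parse the text back into floats, as a table import would.
restored = [tuple(float(tok) for tok in line.split()) for line in lines]
print(restored == rows)
```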

In[]:=

from sklearn.linear_model import LogisticRegression

lr=LogisticRegression(C=100.0,random_state=1).fit(X,y)
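In scikit-learn, `C` is the inverse of the regularization strength, so `C=100.0` means weak regularization. The sketch below shows how a fitted multi-class logistic model could turn one sample into class probabilities, assuming the multinomial (softmax) formulation; which formulation scikit-learn actually uses depends on its version and solver. The weights and biases are hypothetical, not the coefficients fitted above.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(x, weights, biases):
    """One linear score per class, followed by softmax."""
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
              for w, b in zip(weights, biases)]
    return softmax(scores)

# Hypothetical parameters: 3 classes, 2 features (petal length, petal width).
weights = [[-2.0, -1.0], [0.5, -0.5], [1.5, 1.5]]
biases = [8.0, 1.0, -9.0]

x = [1.4, 0.2]  # an illustrative sample with short, narrow petals
probs = predict_proba(x, weights, biases)
label = max(range(len(probs)), key=probs.__getitem__)  # most probable class
print(label, [round(p, 3) for p in probs])
```

The predicted label is simply the class with the largest probability, which is what `lr.predict` reports for each row.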

In[]:=

lr.predict(X[:150,:])

Out[]=

NumericArray

In[]:=

yP=Normal[%]

Out[]=

{0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,1,1,1,1,1,1,2,1,1,1,1,1,2,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,1,2,2,2,2,2,2,2,2,2,2,2,2,2,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2}

In[]:=

y

Out[]=

NumericArray

In[]:=

yTR=Normal[%]

Out[]=

{0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2}

In[]:=

Norm[yP-yTR]

Out[]=

Sqrt[6]

In[]:=

print("Training set score: {:.2f}".format(lr.score(X, y)))

Training set score: 0.96
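The norm of the label difference and the training score describe the same six errors. When every wrong prediction is off by exactly one class, each error contributes 1 to the sum of squares, so the Euclidean norm is the square root of the error count. The sketch below checks this with the standard library only; the positions of the flipped labels are illustrative and are not claimed to match the classifier output.

```python
import math

def mismatch_summary(y_true, y_pred):
    """Return (number of mismatches, Euclidean norm of the difference, accuracy)."""
    diffs = [p - t for p, t in zip(y_pred, y_true)]
    n_wrong = sum(1 for d in diffs if d != 0)
    norm = math.sqrt(sum(d * d for d in diffs))
    accuracy = 1.0 - n_wrong / len(y_true)
    return n_wrong, norm, accuracy

# True labels laid out like the Iris targets: 50 samples per class.
y_true = [0] * 50 + [1] * 50 + [2] * 50

# Hypothetical predictions: copy the truth, then flip six labels to a neighboring class.
y_pred = list(y_true)
for i in (70, 77, 83):      # illustrative positions in class 1
    y_pred[i] = 2
for i in (119, 133, 134):   # illustrative positions in class 2
    y_pred[i] = 1

n_wrong, norm, accuracy = mismatch_summary(y_true, y_pred)
print(n_wrong, round(norm, 3), round(accuracy, 2))  # 6 2.449 0.96
```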

In[]:=

Xtrain=Import["D:\\dataX.txt","Table"];

In[]:=

dataT=MapThread[Join[#1,{#2}]&,{Xtrain,yTR}];

In[]:=

data0=Map[{#[[1]],#[[2]]}&,Select[dataT,#[[3]]==0&]];
data1=Map[{#[[1]],#[[2]]}&,Select[dataT,#[[3]]==1&]];
data2=Map[{#[[1]],#[[2]]}&,Select[dataT,#[[3]]==2&]];
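For readers following along on the Python side, the same split by class label could be sketched as below. The helper name `split_by_class` and the sample rows are illustrative assumptions; the rows only mimic the layout of `dataT` (two features followed by the class).

```python
from collections import defaultdict

def split_by_class(rows):
    """Group (x1, x2, label) rows into {label: [(x1, x2), ...]}."""
    groups = defaultdict(list)
    for x1, x2, label in rows:
        groups[label].append((x1, x2))
    return dict(groups)

# Illustrative rows in the same layout as dataT: two features, then the class.
rows = [(1.4, 0.2, 0), (4.7, 1.4, 1), (1.3, 0.2, 0), (6.0, 2.5, 2)]
groups = split_by_class(rows)
print(groups[0])  # [(1.4, 0.2), (1.3, 0.2)]
```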

In[]:=

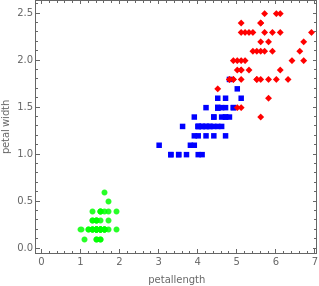

p0=ListPlot[{data0,data1,data2},PlotStyle->{Green,Blue,Red},Frame->True,Axes->None,PlotMarkers->{Automatic},AspectRatio->0.9,FrameLabel->{"petal length","petal width"}]

Out[]=

In[]:=

dataTrain=Map[{#[[1]],#[[2]]}->#[[3]]&,dataT];

In[]:=

c=Classify[dataTrain,Method->"LogisticRegression",PerformanceGoal->"Quality"];

The accuracy of the classifier on the training set is then:

In[]:=

ctraining=ClassifierMeasurements[c,dataTrain]

Out[]=

ClassifierMeasurementsObject

In[]:=

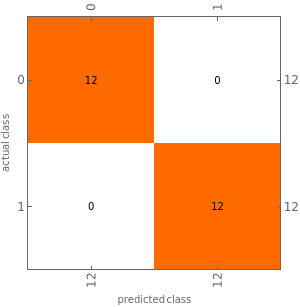

ctraining["Accuracy"]

Out[]=

1.

In[]:=

ctraining["ConfusionMatrixPlot"]

Out[]=

In[]:=

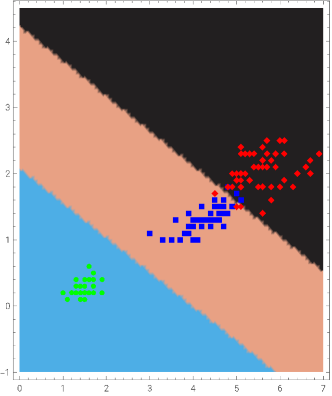

Show[{DensityPlot[c[{u,v}],{u,0,7},{v,-1,4.5},ColorFunction->"CMYKColors",PlotPoints->50],p0},AspectRatio->1.2]

Out[]=

In[]:=

yP=Map[c[#]&,Join[{data0,data1,data2}]]//Flatten;

In[]:=

Norm[yTR-yP]

Out[]=

6
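A confusion matrix like the one plotted from `ctraining` can also be tallied by hand from two label lists. The sketch below is a minimal version with rows as true classes and columns as predicted classes, a convention chosen for this example. The label lists are small illustrative data, not the Iris results.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Count how often true class t (row) was predicted as class p (column)."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

# Illustrative labels: two of the eight predictions are wrong.
y_true = [0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 2, 1, 2, 1, 2]

cm = confusion_matrix(y_true, y_pred, 3)
for row in cm:
    print(row)

# Accuracy is the diagonal (correct predictions) divided by the total count.
accuracy = sum(cm[i][i] for i in range(3)) / len(y_true)
print(accuracy)  # 0.75
```

Off-diagonal entries show which pairs of classes are confused, which a single accuracy figure hides.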

2.2.2 Digit Recognition

In[]:=

import numpy as np

from numpy import array, matrix

from scipy.io import mmread, mmwrite

In[]:=

X=mmread('Xtrain.mtx')

y=mmread('ytrain.mtx')

yy=y[0]
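The `.mtx` files are in Matrix Market format. The sketch below writes and parses the dense "array" variant with the standard library only, to show why `y[0]` yields a flat label vector when the labels are stored as a 1×n matrix. Both the storage variant and the 1×n shape are assumptions about `ytrain.mtx`; the helper names are hypothetical and this is not how `mmread` is implemented.

```python
def write_mm_array(rows):
    """Serialize a dense matrix as Matrix Market 'array' text (column-major values)."""
    n_rows, n_cols = len(rows), len(rows[0])
    lines = ["%%MatrixMarket matrix array real general", f"{n_rows} {n_cols}"]
    for j in range(n_cols):          # the array format lists values column by column
        for i in range(n_rows):
            lines.append(repr(float(rows[i][j])))
    return "\n".join(lines) + "\n"

def read_mm_array(text):
    """Parse Matrix Market 'array' text back into a list of rows."""
    lines = [ln for ln in text.splitlines() if ln.strip() and not ln.startswith("%")]
    n_rows, n_cols = map(int, lines[0].split())
    values = [float(v) for v in lines[1:]]
    return [[values[j * n_rows + i] for j in range(n_cols)] for i in range(n_rows)]

# Illustrative labels stored as a 1 x 4 matrix, as assumed for ytrain.mtx.
labels = [[0, 1, 2, 1]]
y = read_mm_array(write_mm_array(labels))
yy = y[0]   # the first (and only) row is the flat label vector
print(yy)   # [0.0, 1.0, 2.0, 1.0]
```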

In[]:=

from sklearn.linear_model import LogisticRegression

lr=LogisticRegression(C=100.,random_state=1).fit(X,yy)

In[]:=

ytr=lr.predict(X)

In[]:=

ytr

In[]:=

yy

In[]:=

print("Training set score: {:.2f}".format(lr.score(X, yy)))

In[]:=

Xt=mmread('Xtest.mtx')

yt=mmread('ytest.mtx')

yt

In[]:=

yte=lr.predict(Xt)

In[]:=

yte

In[]:=

print("Test set score: {:.2f}".format(lr.score(Xt, yt[0])))