5.2 Multi Layer Perceptron

5.2.1 Multi Layer Perceptron Classifier

In[]:=

session=StartExternalSession["Python"]

Out[]=

ExternalSessionObject

Test

In[]:=

5+6

Out[]=

11

In[]:=

from numpy import array, matrix

from scipy.io import mmread, mmwrite

import numpy as np

In[]:=

from sklearn.datasets import make_moons

X, y =make_moons(n_samples=100,noise=0.25,random_state=3)

In[]:=

mmwrite('pubi.mtx',X)
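Before the file is handed to Mathematica, it can help to confirm that the Matrix Market file really holds the same numbers as X. A minimal sketch, assuming SciPy's mmwrite/mmread round trip; the temporary file name moons_check.mtx is hypothetical and is used so that pubi.mtx is left untouched:

```python
import os
import tempfile

import numpy as np
from scipy.io import mmread, mmwrite
from sklearn.datasets import make_moons

X, y = make_moons(n_samples=100, noise=0.25, random_state=3)

# Write to a throwaway directory so the check does not overwrite pubi.mtx
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "moons_check.mtx")  # hypothetical file name
    mmwrite(path, X)                  # a dense array is stored in "array" format
    X_back = np.asarray(mmread(path))

print(X_back.shape)  # (100, 2)
print(np.allclose(X, X_back))
```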

In[]:=

trainX=Import["pubi.mtx"];

In[]:=

y

Out[]=

NumericArray

In[]:=

Normal[%]

Out[]=

{1,1,0,1,1,1,1,0,0,0,1,0,1,1,0,1,1,0,1,0,1,0,0,0,1,1,0,1,0,1,0,0,0,0,1,0,1,1,0,0,0,0,0,0,1,1,1,1,1,0,1,1,1,1,0,1,1,1,1,1,0,1,1,1,0,1,0,0,0,0,0,1,1,0,1,1,0,1,1,0,1,0,1,0,0,0,0,0,0,1,0,0,1,0,0,0,1,1,0,0}

In[]:=

cluster=%;total=MapThread[{#1,#2}&,{trainX,cluster}];

In[]:=

clust1=Select[total,#[[2]]==0&];

In[]:=

clust2=Select[total,#[[2]]==1&];

In[]:=

pclust1=Map[#[[1]]&,clust1];

In[]:=

pclust2=Map[#[[1]]&,clust2];
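On the Python side, the same split of the points by class label can be done with boolean masks on X, without pairing points and labels first. A minimal sketch, regenerating the data with the same make_moons call; the variable names simply mirror pclust1 and pclust2:

```python
import numpy as np
from sklearn.datasets import make_moons

X, y = make_moons(n_samples=100, noise=0.25, random_state=3)

# Boolean masks select the rows of X that belong to each class
pclust1 = X[y == 0]   # class-0 points (green in the ListPlot)
pclust2 = X[y == 1]   # class-1 points (red in the ListPlot)

print(pclust1.shape, pclust2.shape)  # (50, 2) (50, 2)
```

make_moons puts half of the samples in each moon, so both classes have 50 points here.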

In[]:=

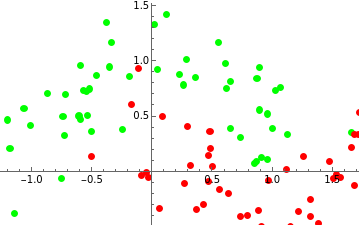

p0=Show[{ListPlot[pclust1,PlotStyle{Green,PointSize[0.017]}],ListPlot[pclust2,PlotStyle{Red,PointSize[0.017]}]}]

Out[]=

In[]:=

from sklearn.neural_network import MLPClassifier

import mglearn

In[]:=

mlp = MLPClassifier(solver='lbfgs', random_state=0).fit(X,y)
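Since only the solver is specified, the classifier uses scikit-learn's defaults for the rest, including a single hidden layer of 100 units. A short sketch that inspects the fitted weight matrices to make the architecture visible (coefs_, n_layers_ and out_activation_ are attributes of a fitted MLPClassifier):

```python
from sklearn.datasets import make_moons
from sklearn.neural_network import MLPClassifier

X, y = make_moons(n_samples=100, noise=0.25, random_state=3)
mlp = MLPClassifier(solver="lbfgs", random_state=0).fit(X, y)

# One weight matrix per layer transition: input (2) -> hidden (100) -> output (1)
shapes = [W.shape for W in mlp.coefs_]
print(shapes)                              # [(2, 100), (100, 1)]
# Input, hidden and output layers; a single logistic unit for two classes
print(mlp.n_layers_, mlp.out_activation_)  # 3 logistic
```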

In[]:=

cupi=mlp.predict(X)

cupi

Out[]=

NumericArray

In[]:=

Normal[%]

Out[]=

{1,1,0,1,1,1,1,0,0,0,1,0,1,1,0,1,1,0,1,0,1,0,0,0,1,1,0,1,0,1,0,0,0,0,1,0,1,1,0,0,0,0,0,0,1,1,1,1,1,0,1,1,1,1,0,1,1,1,1,1,0,1,1,1,0,1,0,0,0,0,0,1,1,0,1,1,0,1,1,0,1,0,1,0,0,0,0,0,0,1,0,0,1,0,0,0,1,1,0,0}

In[]:=

zu=%;

In[]:=

zu-cluster

Out[]=

{0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0}
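The all-zero difference means that every training point is classified correctly. The same check can be done in Python by counting mismatches or by calling score, which returns the mean accuracy. A minimal sketch; note that this measures fit on the training data only, not performance on unseen points:

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.neural_network import MLPClassifier

X, y = make_moons(n_samples=100, noise=0.25, random_state=3)
mlp = MLPClassifier(solver="lbfgs", random_state=0).fit(X, y)

pred = mlp.predict(X)
mismatches = int(np.sum(pred != y))  # number of nonzero entries in zu-cluster
train_acc = mlp.score(X, y)          # mean accuracy on the training set
print(mismatches, train_acc)
```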

In[]:=

import matplotlib.pyplot as plt

In[]:=

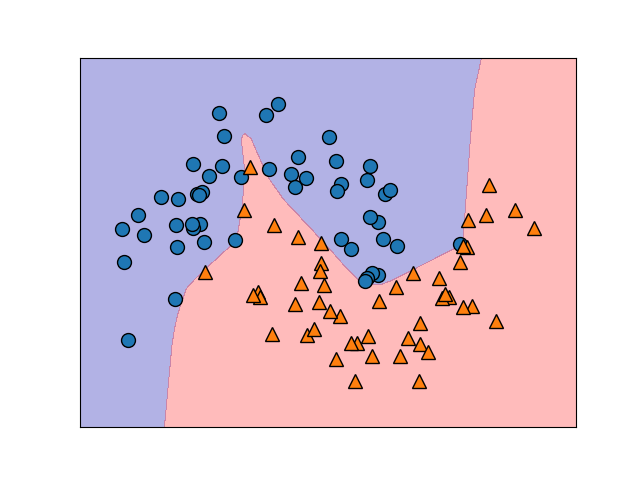

mglearn.plots.plot_2d_separator(mlp, X, fill=True, alpha=.3)

In[]:=

mglearn.discrete_scatter(X[:,0],X[:,1],y)

Out[]=

ExternalObject,ExternalObject

Running this Python code from Mathematica produces an error message at this point; it can be safely ignored.

In[]:=

plt.show()

This Python command displays the figure in a separate window.
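A filled decision-boundary plot of this kind can also be produced without mglearn by evaluating the classifier on a dense grid and shading the predicted classes. The sketch below is a plain NumPy/Matplotlib stand-in, not mglearn's own implementation; it assumes the non-interactive Agg backend, so the figure is written to a file (hypothetical name moons_boundary.png) instead of a separate window:

```python
import matplotlib
matplotlib.use("Agg")  # off-screen backend: no separate window is opened
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import make_moons
from sklearn.neural_network import MLPClassifier

X, y = make_moons(n_samples=100, noise=0.25, random_state=3)
mlp = MLPClassifier(solver="lbfgs", random_state=0).fit(X, y)

# Regular grid covering the data plus a small margin
x0 = np.linspace(X[:, 0].min() - 0.5, X[:, 0].max() + 0.5, 200)
x1 = np.linspace(X[:, 1].min() - 0.5, X[:, 1].max() + 0.5, 200)
xx, yy = np.meshgrid(x0, x1)
grid = np.c_[xx.ravel(), yy.ravel()]
zz = mlp.predict(grid).reshape(xx.shape)  # predicted class at every grid point

fig, ax = plt.subplots()
ax.contourf(xx, yy, zz, alpha=0.3)                 # shaded class regions
ax.scatter(X[:, 0], X[:, 1], c=y, edgecolors="k")  # training points
fig.savefig("moons_boundary.png")                  # hypothetical output file
plt.close(fig)
```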

In[]:=

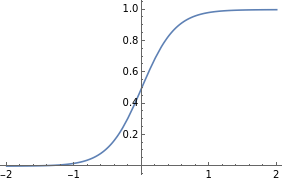

Plot[(Tanh[2x]+1)/2,{x,-2,2}]

Out[]=

In[]:=

myL=ElementwiseLayer[(Tanh[2#]+1)/2&]

Out[]=

ElementwiseLayer
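The function in myL is a rescaled tanh. Since (tanh z + 1)/2 = 1/(1 + e^(-2z)), setting z = 2x gives 1/(1 + e^(-4x)): a logistic sigmoid with range (0, 1) and value 1/2 at x = 0. A quick numerical check of this identity over the plotted range:

```python
import numpy as np

x = np.linspace(-2, 2, 401)               # same range as the Plot
shifted_tanh = (np.tanh(2 * x) + 1) / 2   # the function used in myL
logistic = 1 / (1 + np.exp(-4 * x))       # logistic sigmoid with slope factor 4
max_diff = np.max(np.abs(shifted_tanh - logistic))
print(max_diff)  # rounding-level difference only
```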

In[]:=

net=NetInitialize@NetChain[{LinearLayer[15,"Input"->2],myL,LinearLayer[15],ElementwiseLayer[Ramp],LinearLayer[1]},"Input"->{2}]
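For comparison with the Python side, the forward pass of this NetChain can be written out in NumPy: a 2-vector passes through a linear layer of 15 units with the shifted tanh, a second linear layer of 15 units with Ramp (ReLU), and a final linear layer with a single output and no output activation. A minimal sketch; the random weights are hypothetical stand-ins for what NetInitialize produces, and the row-vector convention X @ W is used:

```python
import numpy as np

rng = np.random.default_rng(0)

def shifted_tanh(z):
    # Same elementwise function as myL: (tanh(2z) + 1) / 2
    return (np.tanh(2 * z) + 1) / 2

def relu(z):
    # Equivalent of ElementwiseLayer[Ramp]
    return np.maximum(z, 0)

# Hypothetical weights (not NetInitialize's actual values); shapes follow
# LinearLayer[15] -> LinearLayer[15] -> LinearLayer[1] on a 2-vector input
W1, b1 = rng.normal(size=(2, 15)), np.zeros(15)
W2, b2 = rng.normal(size=(15, 15)), np.zeros(15)
W3, b3 = rng.normal(size=(15, 1)), np.zeros(1)

def forward(X):
    h1 = shifted_tanh(X @ W1 + b1)  # first hidden layer
    h2 = relu(h1 @ W2 + b2)         # second hidden layer
    return h2 @ W3 + b3             # linear output, no activation

X = rng.normal(size=(5, 2))
out = forward(X)
print(out.shape)  # (5, 1)
```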

5.2.2 Multi Layer Perceptron Regressor

In[]:=

from sklearn.neural_network import MLPRegressor

import numpy as np

import matplotlib.pyplot as plt

import random

In[]:=

x=np.arange(0.,1.,0.01).reshape(-1,1)

In[]:=

y=np.sin(0.3*np.pi*x).ravel()+np.cos(3*np.pi*x*x).ravel()+np.random.normal(0,0.15,x.shape).ravel()

y

In[]:=

# one hidden layer with 12 tanh units; (12,) is a one-element tuple
nn=MLPRegressor(hidden_layer_sizes=(12,),activation='tanh',solver='lbfgs').fit(x,y)

In[]:=

test_x=np.arange(-0.01,1.02,0.01).reshape(-1,1)

test_y=nn.predict(test_x)
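What predict computes can be reproduced by hand from the fitted weights: the hidden layer applies tanh to an affine map of the input, and the regressor's output layer is linear (identity activation). A minimal sketch using the coefs_ and intercepts_ attributes of a fitted MLPRegressor; unlike the original cell it fixes the noise seed and random_state so that the run is reproducible:

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(0)  # fixed seed, unlike the unseeded original
x = np.arange(0., 1., 0.01).reshape(-1, 1)
y = (np.sin(0.3 * np.pi * x) + np.cos(3 * np.pi * x * x)).ravel() + rng.normal(0, 0.15, x.shape[0])

nn = MLPRegressor(hidden_layer_sizes=(12,), activation="tanh",
                  solver="lbfgs", random_state=0).fit(x, y)

test_x = np.arange(-0.01, 1.02, 0.01).reshape(-1, 1)

# Hidden layer: tanh(x W0 + b0); output layer: identity, as used for regression
hidden = np.tanh(test_x @ nn.coefs_[0] + nn.intercepts_[0])
manual = (hidden @ nn.coefs_[1] + nn.intercepts_[1]).ravel()

print(np.allclose(manual, nn.predict(test_x)))
```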

In[]:=

fig = plt.figure()

In[]:=

ax1=fig.add_subplot(111)

In[]:=

ax1.scatter(x,y,s=5,c='b',marker="o",label='real')

In[]:=

ax1.plot(test_x,test_y,c='r',label='NN Prediction')

ax1.legend()

In[]:=

plt.show()