5.1 Single Layer Perceptron

5.1.1 Single Layer Perceptron Classifier

In[]:=

session=StartExternalSession["Python"]

Out[]=

ExternalSessionObject

Test

In[]:=

5+6

Out[]=

11

In[]:=

import numpy as np

class Perceptron(object):
    """Perceptron classifier.

    Parameters
    ------------
    eta : float
        Learning rate (between 0.0 and 1.0)
    n_iter : int
        Passes over the training dataset.
    random_state : int
        Random number generator seed for random weight
        initialization.

    Attributes
    -----------
    w_ : 1d-array
        Weights after fitting.
    errors_ : list
        Number of misclassifications (updates) in each epoch.
    """

    def __init__(self, eta=0.01, n_iter=50, random_state=1):
        self.eta = eta
        self.n_iter = n_iter
        self.random_state = random_state

    def fit(self, X, y):
        """Fit training data.

        Parameters
        ----------
        X : {array-like}, shape = [n_samples, n_features]
            Training vectors, where n_samples is the number of samples and
            n_features is the number of features.
        y : array-like, shape = [n_samples]
            Target values.

        Returns
        -------
        self : object
        """
        rgen = np.random.RandomState(self.random_state)
        # w_[0] is the bias; w_[1:] are the feature weights.
        self.w_ = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
        self.errors_ = []
        for _ in range(self.n_iter):
            errors = 0
            for xi, target in zip(X, y):
                # The update is nonzero only when the sample is misclassified.
                update = self.eta * (target - self.predict(xi))
                self.w_[1:] += update * xi
                self.w_[0] += update
                errors += int(update != 0.0)
            self.errors_.append(errors)
        return self

    def net_input(self, X):
        """Calculate net input"""
        return np.dot(X, self.w_[1:]) + self.w_[0]

    def predict(self, X):
        """Return class label after unit step"""
        return np.where(self.net_input(X) >= 0.0, 1, -1)
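The update rule inside `fit` can be tried on a tiny, linearly separable data set before moving to the forge data. The following is a minimal sketch, not the notebook's own cell: `train_perceptron`, `X_toy`, and `y_toy` are hypothetical names, and the toy points are made up for illustration.

```python
import numpy as np

def train_perceptron(X, y, eta=0.1, n_iter=20, seed=1):
    """Hypothetical helper using the same update rule as Perceptron.fit."""
    rgen = np.random.RandomState(seed)
    w = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
    errors_per_epoch = []
    for _ in range(n_iter):
        errors = 0
        for xi, target in zip(X, y):
            # Unit step on the net input: +1 if w.x + bias >= 0, else -1.
            pred = 1 if np.dot(xi, w[1:]) + w[0] >= 0.0 else -1
            update = eta * (target - pred)
            w[1:] += update * xi
            w[0] += update
            errors += int(update != 0.0)
        errors_per_epoch.append(errors)
    return w, errors_per_epoch

# Made-up, linearly separable points: positives upper right, negatives lower left.
X_toy = np.array([[2.0, 1.0], [1.0, 3.0], [3.0, 2.0],
                  [-1.0, -2.0], [-2.0, -1.0], [-3.0, -3.0]])
y_toy = np.array([1, 1, 1, -1, -1, -1])

w, errors_per_epoch = train_perceptron(X_toy, y_toy)
pred = np.where(X_toy @ w[1:] + w[0] >= 0.0, 1, -1)
print(errors_per_epoch)
print(pred)
```

On separable data the per-epoch error count should drop to zero and stay there, which is a quick check that the rule is implemented correctly.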

In[]:=

import mglearn

import numpy as np

X, y = mglearn.datasets.make_forge()

np.savetxt('E:\\dataX.txt',X,fmt='%.5e')

In[]:=

y

Out[]=

NumericArray

In[]:=

Normal[%]

Out[]=

{1,0,1,0,0,1,1,0,1,1,1,1,0,0,1,1,1,0,0,1,0,0,0,0,1,0}

In[]:=

v=%;

In[]:=

z=Map[If[#==0,-1,1]&,v]

Out[]=

{1,-1,1,-1,-1,1,1,-1,1,1,1,1,-1,-1,1,1,1,-1,-1,1,-1,-1,-1,-1,1,-1}
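The same relabeling can be done on the Python side, which avoids typing the ±1 vector by hand. This is a sketch only: `y01` is a hypothetical name holding the 0/1 labels copied from the output above.

```python
import numpy as np

# 0/1 labels as printed by Normal[%] above.
y01 = np.array([1, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 0,
                0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0])

# Map 0 -> -1 and leave 1 unchanged, mirroring If[# == 0, -1, 1].
yy = np.where(y01 == 0, -1, 1)
print(yy.tolist())
```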

In[]:=

u=Import["E:\\dataX.txt","Table"];

In[]:=

uu=Standardize[u];
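For comparison, a NumPy sketch of the same preprocessing. It assumes that `Standardize` applied to a matrix shifts each column to zero mean and scales it by the sample standard deviation (divisor n - 1). The helper `standardize` and the `demo` array are hypothetical and not part of the notebook.

```python
import numpy as np

def standardize(a):
    """Column-wise z-score using the sample standard deviation (ddof=1)."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)

# Made-up 3x2 matrix, only to show the effect.
demo = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 60.0]])
demo_std = standardize(demo)
print(demo_std.mean(axis=0))         # each column mean is ~0
print(demo_std.std(axis=0, ddof=1))  # each column sample std is ~1
```

Note that the Python perceptron in this section is fitted on the raw `X`, while the Wolfram network is trained on the standardized `uu`.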

In[]:=

class1={};class2={};

In[]:=

MapThread[If[#1==-1,AppendTo[class1,#2],AppendTo[class2,#2]]&,{z,uu}];

In[]:=

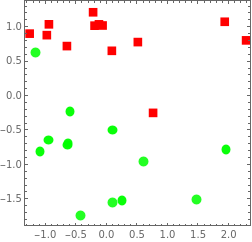

p0=ListPlot[{class1,class2},PlotStyle->{Green,Red},Frame->True,Axes->None,PlotMarkers->{Automatic,Medium},AspectRatio->1]

Out[]=

In[]:=

yy=[1,-1,1,-1,-1,1,1,-1,1,1,1,1,-1,-1,1,1,1,-1,-1,1,-1,-1,-1,-1,1,-1]

In[]:=

ppn=Perceptron(eta=0.5,n_iter=5000).fit(X,yy)

In[]:=

ppn.predict(X)

Out[]=

NumericArray

In[]:=

Normal[%]

Out[]=

{1,-1,1,-1,1,1,1,-1,1,1,1,1,-1,-1,1,1,1,-1,-1,1,-1,-1,-1,-1,1,-1}

In[]:=

zP=%;

In[]:=

zP-z

Out[]=

{0,0,0,0,2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0}
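The difference vector can be condensed into a misclassification count and an accuracy. A small sketch using the two vectors printed above; `wrong` and `accuracy` are names introduced here for illustration.

```python
import numpy as np

# Targets z and perceptron predictions zP, copied from the outputs above.
z = np.array([1, -1, 1, -1, -1, 1, 1, -1, 1, 1, 1, 1, -1,
              -1, 1, 1, 1, -1, -1, 1, -1, -1, -1, -1, 1, -1])
zP = np.array([1, -1, 1, -1, 1, 1, 1, -1, 1, 1, 1, 1, -1,
               -1, 1, 1, 1, -1, -1, 1, -1, -1, -1, -1, 1, -1])

wrong = np.flatnonzero(zP != z)   # zero-based indices of misclassified samples
accuracy = np.mean(zP == z)       # fraction of correct predictions
print(wrong, accuracy)
```

Here a single sample (the fifth) is misclassified, so 25 of the 26 points are predicted correctly.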

In[]:=

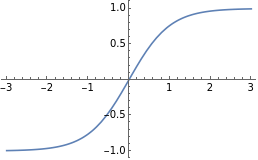

Plot[Tanh[x],{x,-3,3}]

Out[]=

In[]:=

myL=ElementwiseLayer[Tanh[#]&]

Out[]=

ElementwiseLayer

In[]:=

net=NetInitialize@NetChain[{LinearLayer[1,"Input"->2,"Biases"->-1],myL}]

Out[]=

NetChain

In[]:=

trainingData=MapThread[#2->{#1}&,{z,uu}];

In[]:=

trained=NetTrain[net,trainingData,MaxTrainingRounds->10000]

Out[]=

NetChain
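What the chain computes, one linear unit followed by tanh, can be sketched in NumPy. This is an illustration under stated assumptions rather than a reproduction of `NetTrain`: it uses plain batch gradient descent on a mean squared error, whereas `NetTrain` selects its own optimizer and loss. The toy data, learning rate, and iteration count are made up.

```python
import numpy as np

# Made-up, linearly separable inputs with +/-1 targets.
X_toy = np.array([[2.0, 1.0], [1.0, 3.0], [3.0, 2.0],
                  [-1.0, -2.0], [-2.0, -1.0], [-3.0, -3.0]])
t_toy = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])

w = np.zeros(2)   # weights of the single linear unit
b = 0.0           # bias
lr = 0.1          # learning rate (assumed value)

for _ in range(500):
    out = np.tanh(X_toy @ w + b)
    # Gradient of (out - t)^2 w.r.t. the pre-activation; d tanh(a)/da = 1 - tanh(a)^2.
    grad_pre = 2.0 * (out - t_toy) * (1.0 - out ** 2)
    w -= lr * (X_toy.T @ grad_pre) / len(t_toy)
    b -= lr * grad_pre.mean()

out = np.tanh(X_toy @ w + b)
labels = np.where(out >= 0.0, 1, -1)
print(out.round(3), labels)
```

Unlike the unit-step perceptron, the output is a continuous value in (-1, 1), so a decision rule such as the sign of the output is still needed to obtain class labels.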

In[]:=

zu=Map[trained[#]&,uu]//Flatten//Round

Out[]=

{1,-1,1,-1,0,1,1,-1,1,1,1,0,-1,-1,1,1,1,-1,-1,1,-1,-1,-1,-1,1,0}

In[]:=

zu-z

Out[]=

{0,0,0,0,1,0,0,0,0,0,0,-1,0,0,0,0,0,0,0,0,0,0,0,0,0,1}
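The zeros in `zu` come from rounding: any tanh output with magnitude below 0.5 rounds to 0, so those samples are left undecided rather than assigned to a class. A short sketch that locates them, using the vectors printed above; `undecided` and `decided_ok` are names introduced here.

```python
import numpy as np

# Targets z and rounded network outputs zu, copied from the outputs above.
z = np.array([1, -1, 1, -1, -1, 1, 1, -1, 1, 1, 1, 1, -1,
              -1, 1, 1, 1, -1, -1, 1, -1, -1, -1, -1, 1, -1])
zu = np.array([1, -1, 1, -1, 0, 1, 1, -1, 1, 1, 1, 0, -1,
               -1, 1, 1, 1, -1, -1, 1, -1, -1, -1, -1, 1, 0])

undecided = np.flatnonzero(zu == 0)          # zero-based indices rounded to 0
decided_ok = np.all(zu[zu != 0] == z[zu != 0])
print(undecided, decided_ok)
```

Every sample that the network does commit to is classified correctly; the three nonzero entries of `zu-z` are exactly the undecided ones.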

In[]:=

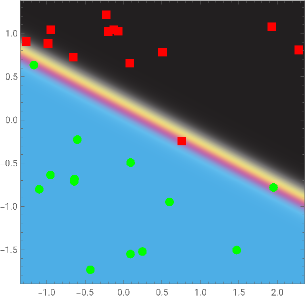

Show[{p0,DensityPlot[trained[{x,y}],{x,-1.4,2.4},{y,-2,1.5},PlotPoints->250,ColorFunction->"CMYKColors"],p0}]

Out[]=

In[]:=

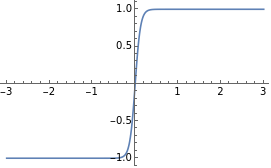

Plot[Tanh[8x],{x,-3,3}]

Out[]=

In[]:=

myL=ElementwiseLayer[Tanh[8#]&]

Out[]=

ElementwiseLayer
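Scaling the argument by 8 makes tanh much steeper, so it behaves almost like the perceptron's unit step away from the origin. A numerical sketch of that effect; the grid and the 0.5 cutoff are chosen here for illustration only.

```python
import numpy as np

x = np.linspace(-3.0, 3.0, 13)   # grid with spacing 0.5, includes 0
soft = np.tanh(x)                # activation used for the first network
sharp = np.tanh(8.0 * x)         # steeper activation, as in Tanh[8#]&
step = np.sign(x)                # ideal +/-1 step (0 at x = 0)

# Largest deviation from the step for points at least 0.5 from the origin.
mask = np.abs(x) >= 0.5
gap_soft = np.max(np.abs(soft[mask] - step[mask]))
gap_sharp = np.max(np.abs(sharp[mask] - step[mask]))
print(gap_soft, gap_sharp)
```

With the steeper activation, outputs saturate near ±1 already for small inputs, so fewer samples should end up in the undecided band after rounding.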