Siria Sadeddin

Face mask detection trained on ResNet-50

The use of face masks has become an important topic in recent days. Automatic face mask detection can help prevent the spread of COVID-19 in public spaces by detecting people who are not wearing a mask. Image classification with Convolutional Neural Networks and pretrained models has shown high performance on a broad range of problems and, more recently, on detecting whether a person is wearing a face mask. We will use a pretrained Convolutional Neural Network to identify whether or not a person is wearing a face mask, based on a cropped image of their face. For this project, ResNet-50 pretrained on ImageNet competition data was extended with a 300-unit linear layer and trained on a balanced dataset of 10,000 face images. The model reached 99% accuracy on the validation data, which consisted of about 800 face images.

Data Exploration

We will use a data set containing about 12,000 cropped images of faces with and without masks. The public data set is available here:

https://www.kaggle.com/ashishjangra27/face-mask-12k-images-dataset/activity.

This data set is well balanced with the following statistics:

◼ Train: 10,000 training images (5,000 with mask and 5,000 without mask)

◼ Test: ~1,000 test images (about 500 with mask and 500 without mask)

◼ Validation: ~800 validation images (about 400 with mask and 400 without mask)

Each of these folders contains two subfolders:

◼ WithMask (images of faces wearing a mask)

◼ WithoutMask (images of faces not wearing a mask)

The images will look as follows:

In[]:=

Data load

In[]:=

dir="C:/Users/ECF0124A/Downloads/Face Mask Dataset";

We want to create separate datasets to train, test and validate the model. Because the dataset is large, we will not load all the images into memory. Instead, we will give the model the image file paths paired with their corresponding classes.

To load the data we define a function named loadFiles, which builds a list of rules mapping each image file to its class (WithMask or WithoutMask), taken from the name of the folder that contains the file.

In[]:=

loadFiles[dir_]:=Map[File[#]->FileNameTake[#,{-2}]&,FileNames["*.png",dir,Infinity]];
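
The class label comes from FileNameTake with the part specification {-2}, which selects the second-to-last element of a path, that is, the folder that directly contains the file. A minimal illustration, using a made-up relative path (the file name example.png is hypothetical and does not need to exist):

```wolfram
(* Build a path in an OS-independent way; "example.png" is a hypothetical file name *)
path = FileNameJoin[{"Train", "WithMask", "example.png"}];

(* {-2} picks the second-to-last path element: the parent folder *)
FileNameTake[path, {-2}]
(* "WithMask" *)
```

Because the label is derived from the folder name, this approach relies on the images staying inside the WithMask and WithoutMask subfolders.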

Applying loadFiles to the Train, Test and Validation folders gives the file-to-class rules for each of these datasets:

In[]:=

trainingData=loadFiles[FileNameJoin[{dir,"Train"}]];
testData=loadFiles[FileNameJoin[{dir,"Test"}]];
valData=loadFiles[FileNameJoin[{dir,"Validation"}]];

View of some images

Let's view some of the images inside the Validation folder using the ImageCollage function:

In[]:=

ImageCollage[Map[Import,RandomSample[FileNames["*.png",FileNameJoin[{dir,"Validation"}],Infinity],75]],Automatic,{600,200}]

Out[]=

We can see that the dataset contains cropped, square images of faces at different resolutions and face positions. It covers people of different ethnicities, genders and ages, which matters when training models on images of people because we do not want the model to carry those kinds of bias. It also includes some comic-style drawings, which may allow the model to classify drawn faces as well.

Data distribution of classes (with mask/without mask) of train, test and validation sets

We will check the data distribution of classes of each dataset, making sure the data is balanced as we expected it to be:
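
The cells that produced the distribution charts are not included in this text. A minimal sketch of one way to compute and plot the class counts, assuming trainingData is the list of file-to-class rules built by loadFiles (the name classCounts is introduced here for illustration):

```wolfram
(* Values extracts the class label from each File[...] -> class rule;
   Counts tallies how many times each label occurs *)
classCounts = Counts[Values[trainingData]]

(* Bar chart of the counts, using the class names as labels *)
BarChart[classCounts, ChartLabels -> Automatic]
```

The same two lines can be applied to testData and valData to check the other two sets.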

Train data distribution of classes

Out[]=

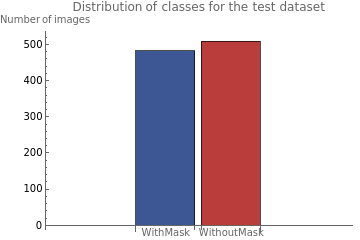

Test data distribution of classes

Out[]=

Validation data distribution of classes

Out[]=

We were able to verify that the datasets are balanced for train, test and validation.

Data Randomization

We shuffle each dataset so that every batch contains a roughly even mix of both classes, which helps the learning process proceed smoothly. The function RandomSample is used for this task.

In[]:=

trainingDataRandomized=trainingData//RandomSample;
testDataRandomized=testData//RandomSample;
valDataRandomized=valData//RandomSample;

Create the Neural Network

We start from a pretrained ResNet-50 model. This model was trained on the ImageNet dataset (http://www.image-net.org/), which contains more than 1 million images in 1,000 different classes. The resulting model weights can be reused for other computer vision tasks, allowing new models to learn faster.

In[]:=

NetModel["ResNet-50 Trained on ImageNet Competition Data"]

Out[]=

NetChain

To train a new model with a different number of classes, we need to remove the last LinearLayer and SoftmaxLayer from this architecture, since those layers are sized for the 1,000 ImageNet classes. Later we will add our own layers matching the number of classes in our dataset (2 for this task).

Pretrained model

We first import the pretrained ResNet-50 model and remove the last 2 layers:

In[]:=

tempNet=Take[NetModel["ResNet-50 Trained on ImageNet Competition Data"],{1,-3}]

Out[]=

NetChain

Customized neural network

With the last 2 layers of the pretrained ResNet-50 removed, we can build a customized neural network on top of it and define the number of classes needed for this specific task.

After the pretrained convolutional ResNet-50 layers, we add DropoutLayer and LinearLayer layers. This lets the model learn new features on top of the transferred ones (the pretrained weights). Each of the new layers contributes as follows:

◼ LinearLayer: adds trainable capacity on top of the pretrained features

◼ DropoutLayer: helps prevent overfitting by randomly switching off units during training

◼ SoftmaxLayer: converts the final outputs into class probabilities for the classification task

In[]:=

newNet=NetChain[<|"pretrainedNet"->tempNet,"Dropout1"->DropoutLayer[0.2],"linear"->LinearLayer[300],"Dropout2"->DropoutLayer[0.2],"linear2"->LinearLayer[2],"softmax"->SoftmaxLayer[]|>,"Output"->NetDecoder[{"Class",{"WithMask","WithoutMask"}}]]

Out[]=

NetChain

Train the model

The model is trained with a BatchSize of 16 for 2 training rounds. During training we monitor the following measurements: "ConfusionMatrixPlot", "Accuracy", "Precision" and "Recall".
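
The training cell itself is not included in this text. A minimal sketch of what such a call could look like, using the batch size, number of rounds and measurements stated above. The use of valDataRandomized as the ValidationSet and the default training method are assumptions; the name trainedNet is taken from the usage examples later in this document:

```wolfram
(* Sketch only: the validation set choice and default optimizer are assumptions *)
trainedNet = NetTrain[
  newNet,                                 (* pretrained ResNet-50 plus the new layers *)
  trainingDataRandomized,                 (* list of File[...] -> class rules *)
  ValidationSet -> valDataRandomized,     (* assumed: monitor on the validation split *)
  BatchSize -> 16,
  MaxTrainingRounds -> 2,
  TrainingProgressMeasurements -> {"ConfusionMatrixPlot", "Accuracy", "Precision", "Recall"}
]
```

Since the net ends in a SoftmaxLayer with a "Class" decoder, NetTrain can choose a suitable classification loss automatically, so no explicit loss layer appears in this sketch.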

Net evaluation

Several evaluation metrics show the performance of the model on the validation data:

Confusion matrix:

ROC curve:

Accuracy:

F1 Score:

Precision and recall:
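
The evaluation cells and their outputs are not preserved in this text. One way to obtain the metrics listed above is NetMeasurements; the sketch below assumes trainedNet is the trained network and valDataRandomized is the shuffled validation set defined earlier:

```wolfram
(* Scalar and per-class metrics on the validation data *)
NetMeasurements[trainedNet, valDataRandomized,
  {"Accuracy", "F1Score", "Precision", "Recall"}]

(* Graphical summaries *)
NetMeasurements[trainedNet, valDataRandomized, "ConfusionMatrixPlot"]
NetMeasurements[trainedNet, valDataRandomized, "ROCCurvePlot"]
```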

Basic usage:

Predict whether a person in a photograph is wearing a face mask:

Example 1:

We import an image of a person wearing a face mask from the validation set and apply trainedNet to it:

Importing the image of a person with face mask:

Applying the trained net over the image:

Obtain the probabilities:
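
The cells for these three steps are not shown in this text. A minimal sketch, assuming dir and trainedNet are defined as above (the variable names files and img are introduced here for illustration, and the image is picked at random rather than being the one used in the original example):

```wolfram
(* Pick one validation image from the WithMask folder *)
files = FileNames["*.png", FileNameJoin[{dir, "Validation", "WithMask"}]];
img = Import[RandomChoice[files]]

(* Predicted class, decoded by the "Class" NetDecoder *)
trainedNet[img]

(* Probability assigned to each class *)
trainedNet[img, "Probabilities"]
```

Replacing "WithMask" with "WithoutMask" in the folder path gives the corresponding check for an unmasked face.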

Example 2:

We import an image of a person who is not wearing a mask from the validation set and apply trainedNet to it:

Importing the image of a person without face mask:

Applying the trained net over the image:

Obtain the probabilities:

Applications

We will now test the model on images that are not part of the train, test or validation sets, and show how the trained model can be used on any image of a person, especially when the face or faces are not cropped.

Example 1:

This net was designed to work with cropped images of faces. If the photograph is not a cropped image of a face, the results may be unexpected:

Applying the trained net over the image:

Crop the photograph:

Predict mask use from the cropped image:

Example 2:

We can use the trained model on pictures with more than one face. In the next example we have a picture of two people wearing face masks. We first find the faces and crop them, then use the function Map to apply trainedNet to each of the faces:
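
The find, crop and classify steps can be sketched as follows. The original cells are not shown, so the choice of FindFaces and ImageTrim here is an assumption about how the faces were located and cropped, and the file path is a hypothetical placeholder:

```wolfram
(* Hypothetical path: replace with the photograph to analyze *)
photo = Import["path/to/photo.jpg"];

(* FindFaces returns one bounding box per detected face;
   ImageTrim crops the photograph to each box *)
faces = ImageTrim[photo, #] & /@ FindFaces[photo];

(* Classify each cropped face *)
Map[trainedNet, faces]
```

The quality of the result depends on the face detector: a face that is missed or poorly cropped will not be classified reliably.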

Example 3:

Again, we use the trained model on a picture with more than one face. In this example there are two people, one wearing a face mask and the other not. We first find the faces and crop them, then use the function Map to apply trainedNet to each of the faces:

Results

We have seen how transfer learning with the ResNet-50 architecture can be used to solve a computer vision task: recognizing the use of a face mask from a cropped image of a face. Training on a dataset of 10,000 images, we obtained a model with 99% precision and recall on the validation data set. We also tested the model on a few images taken from the internet and noticed that it can fail when the resolution of the image is low.